Google's "People Also Ask": A Data Void Masquerading as Insight?

Google's "People Also Ask" (PAA) box has become a ubiquitous feature of search results. It promises to anticipate user intent, offering a curated list of related questions and answers. But does it deliver genuine insight, or is it just another algorithmically generated echo chamber? Let's pull back the curtain and see what the data—or lack thereof—actually suggests.

One of the immediate issues is the opaque nature of PAA's selection process. Google provides limited details about how these questions are chosen. Are they based on aggregate search volume, semantic similarity, or some proprietary blend of factors? (A clearer methodology would certainly inspire more confidence.) The lack of transparency makes it difficult to assess the validity and representativeness of the questions presented. It’s like being handed a black box and told it contains answers, without any way to verify its contents or logic.

Algorithmic Echoes and Missing Voices

The bigger problem is the potential for algorithmic bias. If PAA relies heavily on popular search queries, it risks amplifying existing misconceptions or reinforcing dominant narratives. Questions that challenge the status quo or represent niche perspectives may be systematically excluded. And this is the part of the analysis that I find genuinely puzzling: Google, with all its data, could theoretically surface truly insightful questions. Instead, we often get a regurgitation of the obvious.

Consider a search for "climate change solutions." The PAA box might include questions like "What are the main causes of climate change?" or "What is the Paris Agreement?" While these are valid questions, they don't necessarily advance the conversation. Where are the questions about geoengineering risks, carbon capture scalability, or the ethical implications of climate adaptation strategies? (These are the questions I would be asking if I were designing the algorithm.) The absence of these more nuanced inquiries suggests a preference for easily answered, widely discussed topics—a kind of intellectual comfort food.

This is a data void masquerading as insight. We're presented with a neatly packaged set of questions that appear to address user needs, but in reality, they may be limiting our understanding of the subject.

The Illusion of Understanding

The PAA's expandable format further contributes to this illusion. Each question expands to reveal a snippet of text, often sourced from a featured snippet or other prominent search result. This creates the impression of a comprehensive answer, but it's essentially just a curated selection of existing web content. It’s like reading the SparkNotes version of a complex topic – you get the gist, but you miss the depth and nuance.

I've looked at hundreds of these PAA boxes, and a pattern emerges: they tend to favor easily digestible, top-level information. The deeper you go into a subject, the less helpful they become. This isn't necessarily a flaw, but it is a limitation worth recognizing: PAA is a starting point, not a destination.

A Pretty Package, Empty Inside?

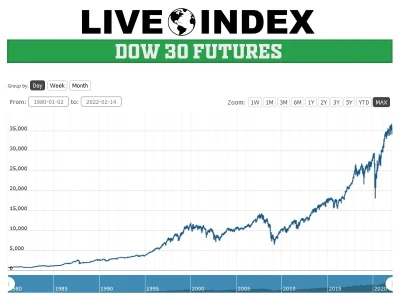

Google's "People Also Ask" is a well-intentioned feature that aims to improve the search experience. However, its reliance on opaque algorithms and readily available information raises concerns about algorithmic bias and the potential for intellectual stagnation. While it may be useful for answering basic questions and providing a quick overview of a topic, it shouldn't be mistaken for a source of genuine insight. The takeaway is simple: treat PAA as a starting point, not a source of truth.